Our Mission

Promote fully Trustworthy and Aligned Artificial General Intelligence, with a focus on certifiability, through theoretically principled approaches.

- Principled Understanding: We aim to understand how different capabilities are correlated, jointly acquired, and stored in large deep learning models. This understanding lets us systematically analyze why trustworthiness threats arise in practice.

- Certifiable Mitigations: We aim to mitigate trustworthiness threats in large deep learning models with certifiable guarantees. We develop certification methods for large deep learning models and combine them with data refinement and model refinement.

Representative Research

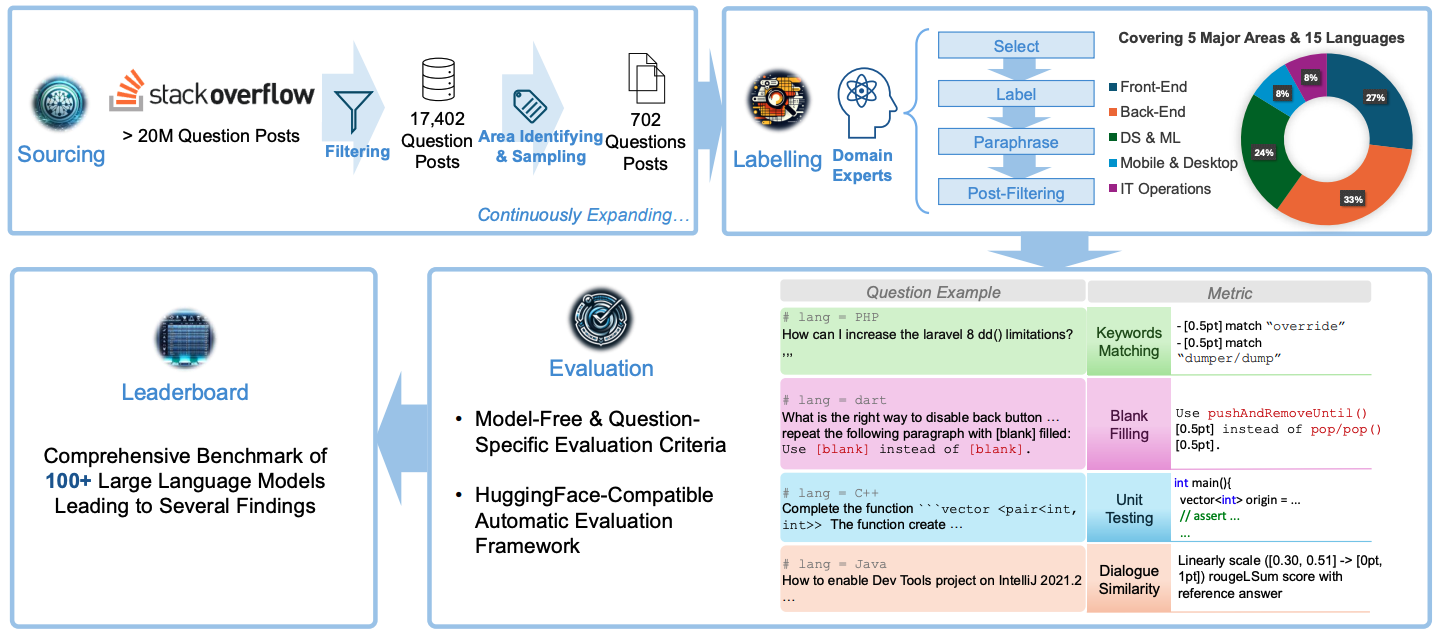

InfiBench: Benchmarking Question-Answering for Code LLMs

[project page] [paper] [code] [slides] [OpenReview]

- A high-quality human-annotated benchmark for code-related question-answering for large language models (LLMs).

- 234 questions sourced from Stack Overflow, each paired with rule-based, model-free evaluation criteria written by human experts.

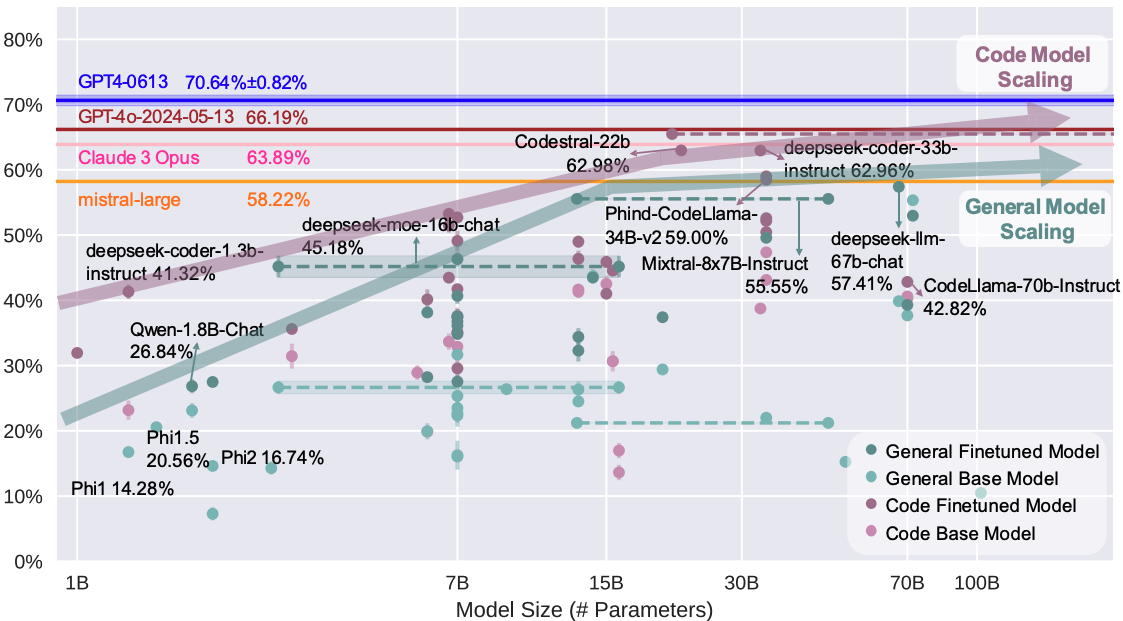

- Over 100 code LLMs evaluated, forming the largest leaderboard for code LLMs to the best of our knowledge.

- Reveals empirical scaling laws for both code-specific and general-purpose models, and highlights the remaining barriers and challenges.

- Fully open-source.
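To make "rule-based, model-free evaluation" concrete, here is a minimal sketch of one kind of rule: weighted keyword matching, where an answer earns the weights of the expert-written patterns it contains and no judge model is involved. This is an illustrative assumption, not InfiBench's actual implementation; the names `Keyword` and `keyword_match_score` and the example rules are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Keyword:
    """Hypothetical scoring rule: a regex pattern and the weight it contributes."""
    pattern: str
    weight: float = 1.0


def keyword_match_score(response: str, keywords: List[Keyword]) -> float:
    """Return the weighted fraction of patterns found in `response`, in [0, 1].

    Matching is case-insensitive and fully deterministic (no model involved).
    """
    total = sum(k.weight for k in keywords)
    if total == 0:
        return 0.0  # no rules means nothing can be earned
    matched = sum(
        k.weight
        for k in keywords
        if re.search(k.pattern, response, flags=re.IGNORECASE)
    )
    return matched / total


if __name__ == "__main__":
    # Hypothetical rules for a question about sorting a list of pairs.
    rules = [
        Keyword(r"\bsorted\s*\(", 2.0),          # expects a call to sorted(...)
        Keyword(r"\bkey\s*=", 1.0),              # expects a key= argument
        Keyword(r"\breverse\s*=\s*True", 1.0),   # expects descending order
    ]
    answer = "Use sorted(items, key=lambda x: x[1])."
    print(keyword_match_score(answer, rules))
```

Because the score depends only on the response text and fixed rules, the same answer always receives the same score, which is what makes such criteria reproducible across a large leaderboard.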

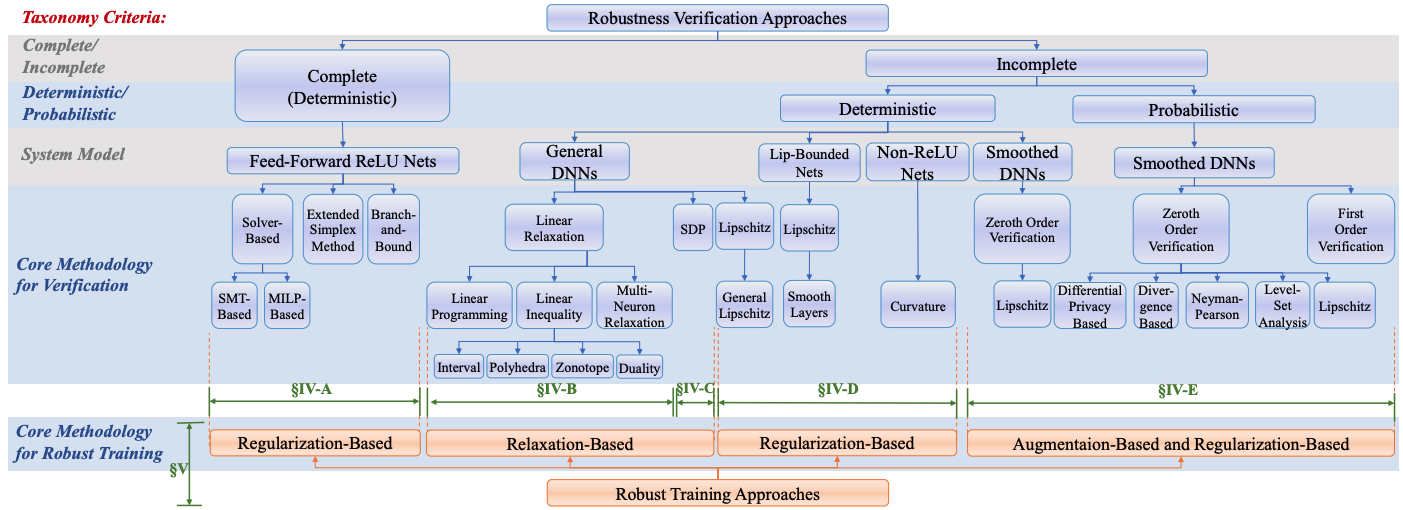

SoK: Certified Robustness for Deep Neural Networks

[project page] [paper] [code] [slides] [talk]

- A comprehensive systematization of knowledge (SoK) on certified robustness for deep neural networks (DNNs), summarizing the latest efforts on this important topic.

- Discussion of practical and theoretical implications, findings, main challenges, and future directions.

- Accompanied by an open-source unified platform for evaluating 20+ representative approaches.
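As a flavor of what a deterministic certification approach does, the sketch below implements interval bound propagation (IBP), a simple sound-but-incomplete technique: it pushes an \(\ell_\infty\) box of radius `eps` through a small fully connected ReLU network and checks whether the true logit's lower bound exceeds every other logit's upper bound. This is a generic textbook illustration, not code from the accompanying platform; the function names and the toy network are hypothetical, and the per-logit comparison is looser than bounding the logit margin directly.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, bias) for y = W x + b


def affine_bounds(W: Matrix, b: Vector, lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Propagate the box [lo, hi] through y = W x + b, coordinate by coordinate."""
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        l, h = bias, bias
        for w, xl, xh in zip(row, lo, hi):
            # A positive weight keeps the bound order; a negative weight flips it.
            if w >= 0:
                l += w * xl
                h += w * xh
            else:
                l += w * xh
                h += w * xl
        out_lo.append(l)
        out_hi.append(h)
    return out_lo, out_hi


def relu_bounds(lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """ReLU is monotone, so it can be applied to each bound separately."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def ibp_certify(layers: Sequence[Layer], x: Vector, eps: float, label: int) -> bool:
    """True if every input within L-infinity distance eps of x is provably
    classified as `label`. False means "not certified", not "not robust"."""
    lo = [v - eps for v in x]
    hi = [v + eps for v in x]
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(W, b, lo, hi)
        if i < len(layers) - 1:  # ReLU after every hidden layer, none on the logits
            lo, hi = relu_bounds(lo, hi)
    return all(lo[label] > hi[j] for j in range(len(lo)) if j != label)


if __name__ == "__main__":
    # Hypothetical 2-2-2 network; at x = [1, 0] it predicts class 0.
    net = [
        ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
        ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    ]
    print(ibp_certify(net, [1.0, 0.0], eps=0.1, label=0))
    print(ibp_certify(net, [1.0, 0.0], eps=0.6, label=0))
```

With `eps=0.1` the box stays inside the class-0 region and the check succeeds; with `eps=0.6` it fails, and indeed the point `[0.4, 0.6]` inside that ball is classified as class 1. Tighter relaxations and complete verifiers trade more computation for fewer "not certified" answers.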

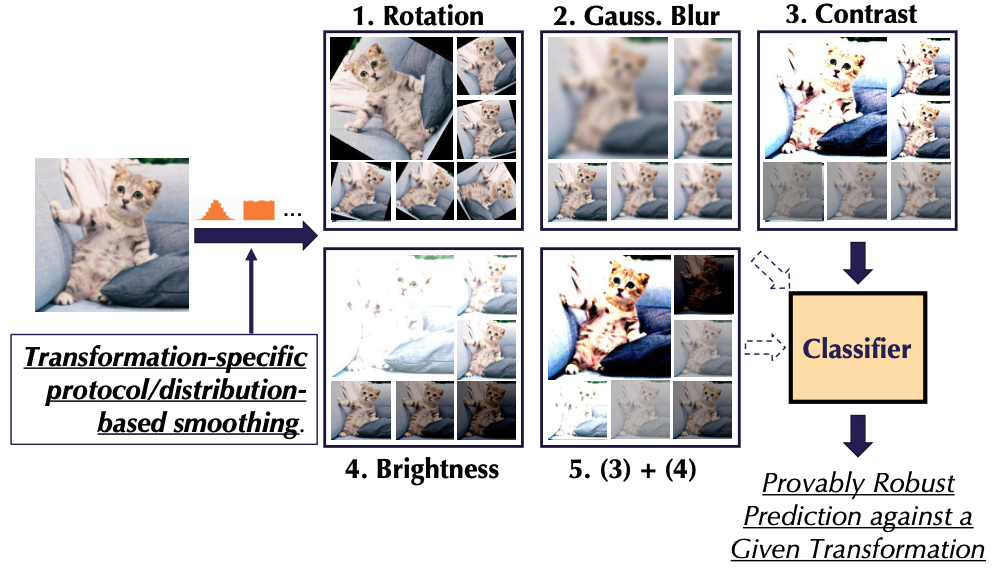

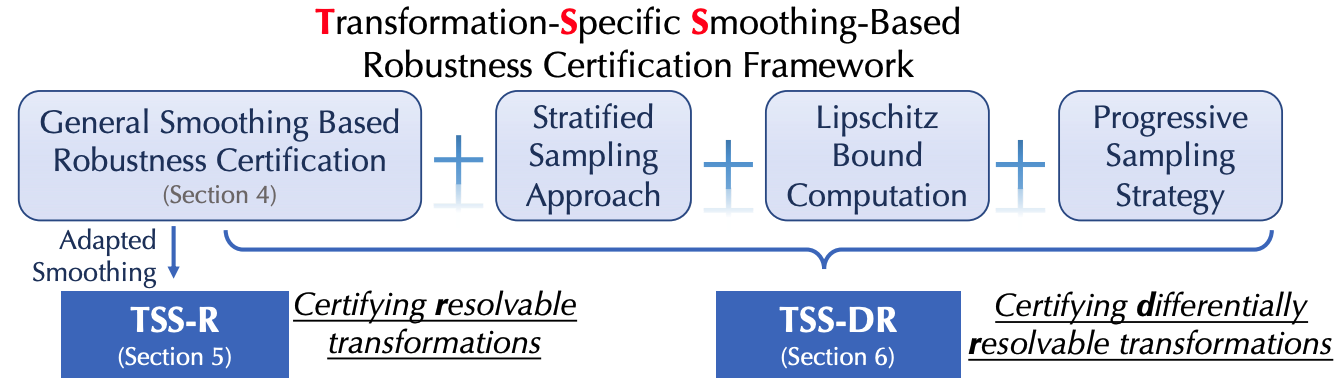

Transformation-specific Smoothing for Robustness Certification

[paper] [code] [slides] [talk]

- The first scalable certification approach against natural transformations based on randomized smoothing, rigorous Lipschitz analysis, and stratified sampling.

- Achieving certified robustness against natural transformations such as rotation, scaling, brightness change, and Gaussian blurring that are common in the physical world. Prior to this work, to the best of our knowledge, robustness certification was limited to \(\ell_p\)-bounded perturbations.

- For the first time, we certify non-trivial robustness (>30% certified robust accuracy against any rotation attack within \(\pm 30^\circ\)) on the large-scale ImageNet dataset.

- Open-source code, ready for use.
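The core idea of smoothing over a transformation's parameter can be sketched in its simplest case: an additive brightness shift, where shifting by \(b_1\) and then \(b_2\) equals shifting by \(b_1 + b_2\) (assuming no pixel clipping). Smoothing the base classifier with \(b \sim \mathcal{N}(0, \sigma^2)\) then certifies every shift with \(|b| < \sigma\,\Phi^{-1}(\underline{p_A})\), the standard Gaussian randomized-smoothing radius applied to the one-dimensional parameter. This is a minimal sketch under those assumptions, not the paper's method: rotation and scaling do not compose this way and need the Lipschitz analysis and stratified sampling described above, which are omitted. A one-sided Hoeffding bound is swapped in for the Clopper-Pearson bound commonly used, and `brighten`, `certify_brightness`, and the toy classifier are hypothetical.

```python
import math
import random
from collections import Counter
from statistics import NormalDist
from typing import Callable, List, Optional, Tuple


def brighten(x: List[float], b: float) -> List[float]:
    """Hypothetical additive brightness shift (no clipping, so shifts compose)."""
    return [v + b for v in x]


def certify_brightness(
    classify: Callable[[List[float]], int],
    x: List[float],
    sigma: float,
    n0: int = 100,
    n: int = 10_000,
    alpha: float = 0.001,
    seed: int = 0,
) -> Tuple[Optional[int], float]:
    """Return (class, radius) such that, with probability >= 1 - alpha, the
    smoothed classifier predicts `class` for every shift |b| < radius.
    Returns (None, 0.0) to abstain."""
    rng = random.Random(seed)

    def sample_counts(m: int) -> Counter:
        # Classify m copies of x, each shifted by b ~ N(0, sigma^2).
        return Counter(classify(brighten(x, rng.gauss(0.0, sigma))) for _ in range(m))

    # Pick the candidate class on a separate sample to avoid selection bias.
    top = sample_counts(n0).most_common(1)[0][0]
    k = sample_counts(n)[top]
    # One-sided Hoeffding lower confidence bound on P[classify = top].
    p_lower = k / n - math.sqrt(math.log(1.0 / alpha) / (2.0 * n))
    if p_lower <= 0.5:
        return None, 0.0
    # Gaussian smoothing radius, measured in brightness units.
    return top, sigma * NormalDist().inv_cdf(p_lower)


if __name__ == "__main__":
    def mean_threshold(x: List[float]) -> int:
        # Toy base classifier: class 1 if the mean intensity exceeds 0.5.
        return int(sum(x) / len(x) > 0.5)

    print(certify_brightness(mean_threshold, [0.8, 0.9, 1.0], sigma=0.2))
```

For the toy input, the true safety margin is a shift of 0.4, and the certified radius comes out somewhat below it because of the confidence bound; a larger `n` tightens the gap.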

Selected Awards

- [2025] Our lab received an NSERC Discovery Grant with a Launch Supplement.

- [2024] Linyi Li was selected for the AAAI 2025 New Faculty Highlights.

- [2023] Winner of the International Verification of Neural Networks Competition (VNN-COMP 2023), with Dr. Linyi Li as team co-leader.

- [2022] Linyi Li was selected as a Rising Star in Data Science by the Data Science Institute (DSI), University of Chicago.

- [2022] Linyi Li received the 2022 AdvML Rising Star Award.